Turnitin’s AI detection isn’t always accurate, and Brophy has put new policies in place to address the problem.

According to Turnitin, its AI detection tool has a 1% false positive rate. The company also claims 98% accuracy in identifying AI-generated text; however, an independent study put that figure at 100% for a sample of 126 documents.

That level of accuracy has not held up everywhere. St. Joseph’s University, for example, found the tool to be only 50–75% accurate, with many false positives, and concluded that it was causing more harm than good.

“It was causing more problems than it was solving because there is no real way to say, ‘yes, this was written by artificial intelligence,’” said Mrs. Andy Starr, the manager of academic systems at St. Joseph’s.

In another example, a writer at the University of Maryland found that Turnitin incorrectly identified half of 16 papers.

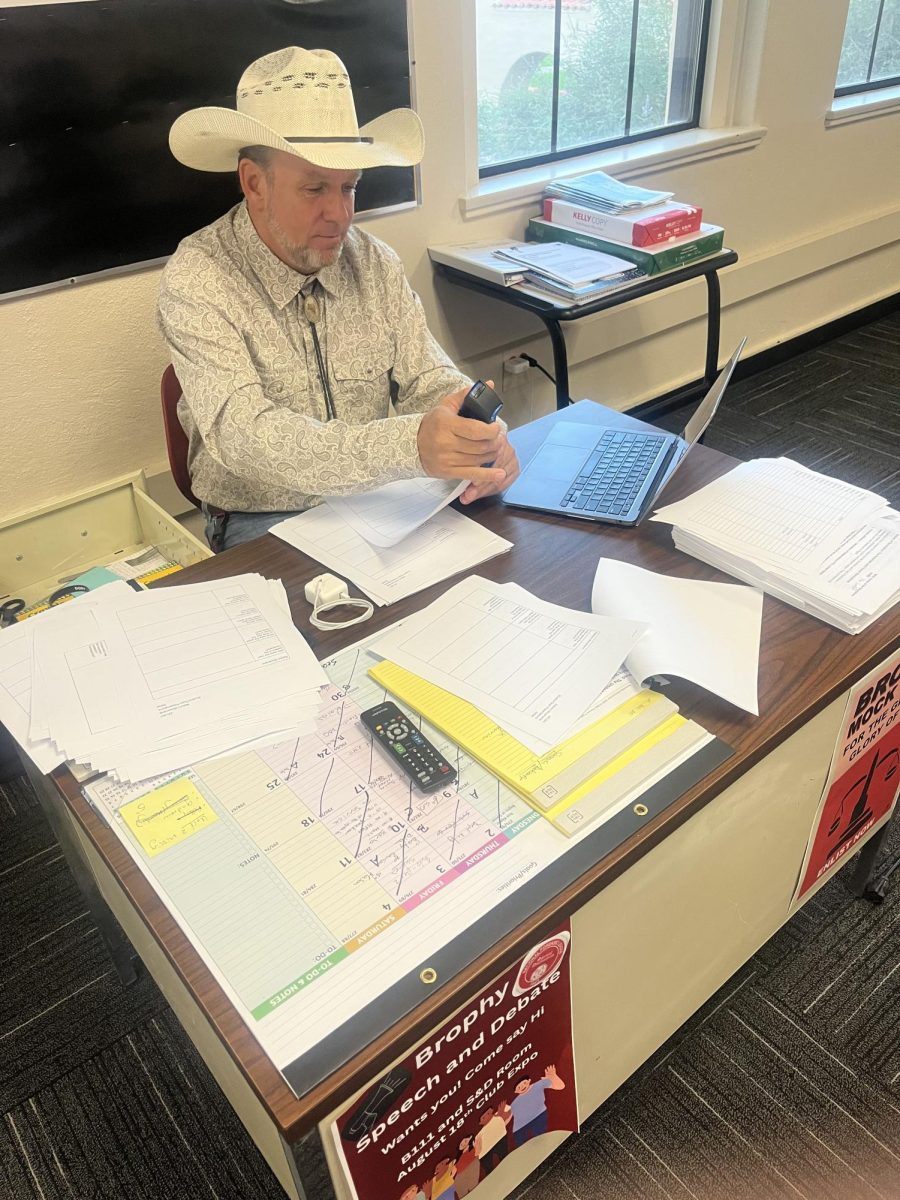

Brophy recognizes the shortcomings of Turnitin’s AI detection.

“I don’t think there is an AI detection tool out there right now that is anything [other] than unreliable at best,” said Mr. Mica Mulloy ’99, the assistant principal of instruction and innovation. “There have been plenty of cases where the detection tool flagged something that was absolutely AI-generated. There have also been cases where it has missed something.”

This unreliability matters because, as students acknowledge, AI is still used for plagiarism. Asked whether Brophy students use AI on assignments, Isaac Peterson ’27 said, “Small assignments yes, large assignments no.”

To address this, Brophy is implementing a “start of a conversation” approach. When a piece of work is flagged or suspected of being AI-generated, the approach focuses on walking through the student’s writing process and looking through the student’s Google Doc.

Unreliable AI detection is part of a larger problem plaguing schools and universities across the country. Brophy has responded by changing its AI policy, including adopting the “start of a conversation” approach.